Dog Go

Web Project

A web scraping application and Django web app that displays dogs available for adoption based on search parameters.


Summary

Dog Go is a web scraping project designed to help potential pet adopters stay informed about newly available dogs in their area. The application automatically scrapes PetFinder for the latest dog listings and presents them through a clean, user-friendly Django web interface, making it easier for users to discover adoptable dogs without manually checking the site repeatedly.

Project Motivation

Finding the perfect adoptable dog can be challenging, especially in competitive adoption markets where desirable pets are adopted quickly. Dog Go addresses this problem by automating the monitoring process, ensuring users never miss out on new listings that match their preferences. This project combines web scraping automation with a practical web interface to streamline the pet adoption discovery process.

Technical Architecture

The project is structured with clear separation between data collection and presentation components:

- Scraping Engine: Located in src/dog_go, handles all data collection from PetFinder

- Web Application: Stored in src/dog_go_webapp, provides the Django-based user interface

- Data Management: CSV-based storage system for tracking dogs over time

Data Collection Workflow

The scraping process runs in two modes: a basic one-off fetch, and an update mode that also detects which listings are new.

Basic Scraping Process

When fetch_dogs.py is executed, it starts the data collection sequence through the get_dogs() function. This function passes user-specified parameters to get_dog_cards(), which uses the Selenium library to query PetFinder's website programmatically. The resulting collection of Selenium element objects is then processed by clean_dog_cards(), which standardizes the data and returns it as a pandas DataFrame.
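The cleaning step can be illustrated with a minimal sketch. It assumes the text of each card has already been pulled out of the Selenium elements into plain dictionaries. The field names (name, breed, age, url) and the example.com URLs are hypothetical, and the body shown here is not the project's actual clean_dog_cards() implementation.

```python
import pandas as pd

# Hypothetical column set; the real project may scrape different fields.
COLUMNS = ["name", "breed", "age", "url"]


def clean_dog_cards(raw_cards):
    """Turn raw card records (a list of dicts) into a tidy DataFrame."""
    df = pd.DataFrame(raw_cards, columns=COLUMNS)
    # Strip stray whitespace left over from the page markup.
    for col in COLUMNS:
        df[col] = df[col].str.strip()
    # A listing without a URL cannot be identified later, so drop it.
    df = df.dropna(subset=["url"])
    # The same card can show up twice on a page; keep the first copy.
    return df.drop_duplicates(subset="url").reset_index(drop=True)


raw_cards = [
    {"name": " Biscuit ", "breed": "Beagle", "age": "Young",
     "url": "https://example.com/dogs/1 "},
    {"name": "Biscuit", "breed": "Beagle", "age": "Young",
     "url": "https://example.com/dogs/1"},
    {"name": "Juno", "breed": " Husky", "age": "Adult",
     "url": "https://example.com/dogs/2"},
]
dogs = clean_dog_cards(raw_cards)
print(dogs)
```

Using the listing URL as the identity of a dog is a design assumption of this sketch; any column that is unique per listing would serve the same purpose.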

Smart Update System

For more sophisticated monitoring, the update_dogs.py script provides an enhanced workflow that includes change detection. After creating the DataFrame of current listings, the system compares this data against data/old_dogs.csv, which maintains a record of previously discovered dogs. This comparison process filters out duplicates, ensuring that only genuinely new listings are highlighted to users.

The newly identified dogs are then appended to the historical record in old_dogs.csv, creating a comprehensive database for future comparisons. This approach prevents users from seeing the same dogs repeatedly while maintaining a complete history of all discovered listings.
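The compare-then-append logic described above might look roughly like the following. This is a sketch under stated assumptions, not the code in update_dogs.py: the function name find_new_dogs, the url key column, and the sample data are all hypothetical.

```python
import tempfile
from pathlib import Path

import pandas as pd


def find_new_dogs(current, old_path, key="url"):
    """Return rows of `current` not yet recorded in the CSV at `old_path`,
    then append those rows to the CSV so later runs skip them."""
    old_path = Path(old_path)
    seen = set()
    if old_path.exists():
        seen = set(pd.read_csv(old_path, dtype=str)[key])
    # A dog is new when its key is absent from the historical record.
    new_dogs = current[~current[key].isin(seen)].reset_index(drop=True)
    # Append mode keeps the full history; write the header only once.
    new_dogs.to_csv(old_path, mode="a", header=not old_path.exists(), index=False)
    return new_dogs


# Two simulated scraping runs against a temporary history file.
with tempfile.TemporaryDirectory() as tmp:
    history = Path(tmp) / "old_dogs.csv"
    monday = pd.DataFrame({"name": ["Biscuit", "Juno"], "url": ["u/1", "u/2"]})
    tuesday = pd.DataFrame({"name": ["Juno", "Moose"], "url": ["u/2", "u/3"]})
    first_run = find_new_dogs(monday, history)
    second_run = find_new_dogs(tuesday, history)
    history_size = len(pd.read_csv(history))
```

In this example the second run reports only Moose, because Juno was already recorded by the first run, and the history file ends up with three rows.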

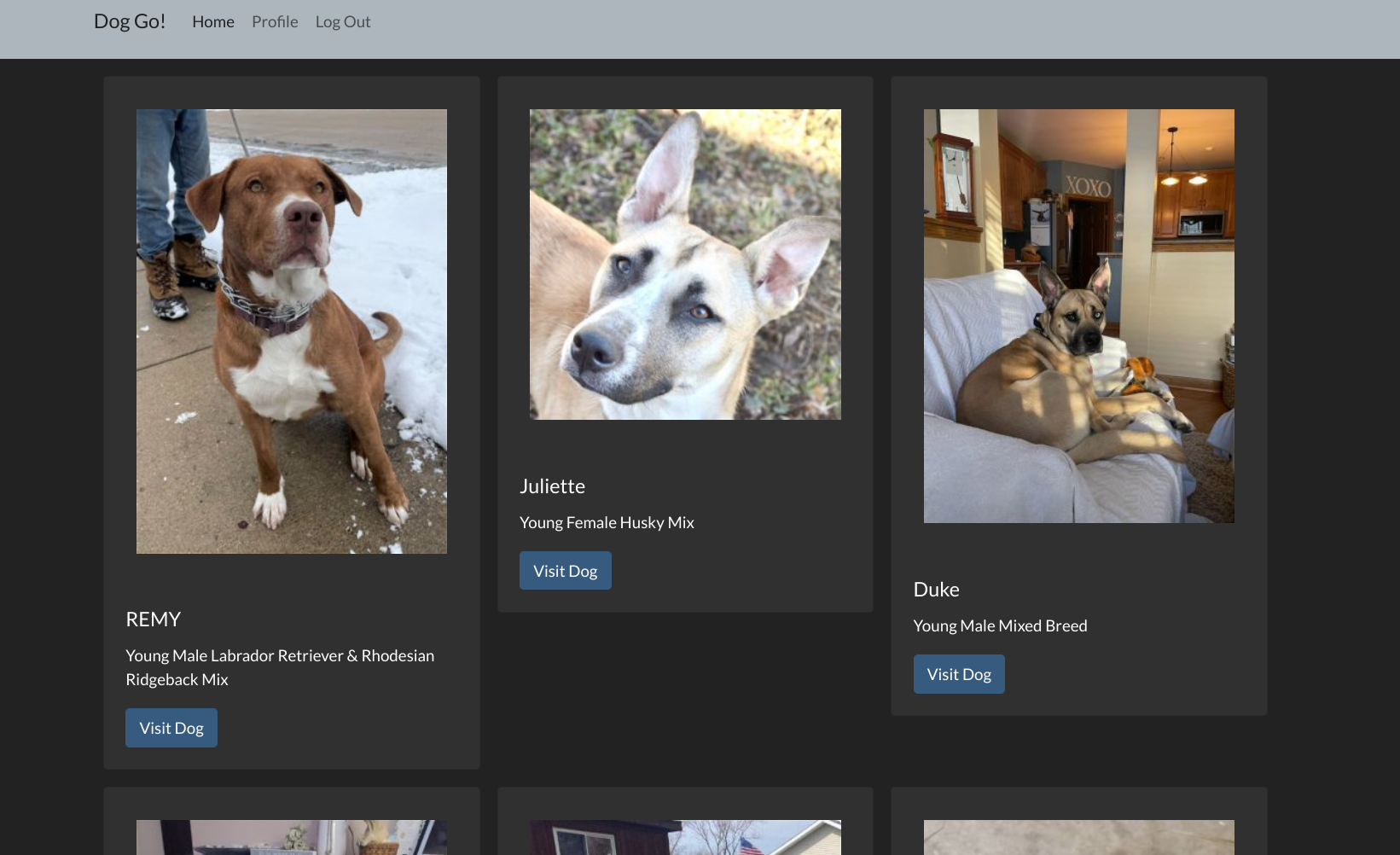

Web Interface

The Django-based web application provides an intuitive interface for browsing collected dog listings. To launch the webapp, users simply copy the old_dogs.csv file to dog_go_webapp/src/data/ and start the Django server using the standard python manage.py runserver command.

The interface presents dog listings in an organized, easy-to-browse format that makes it simple to review multiple dogs quickly. Each listing includes essential information scraped from PetFinder, helping users make informed decisions about potential adoptions.
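As a rough illustration of how the copied CSV could reach a page, the helper below reads the file into a list of dictionaries that a template can loop over. The helper name load_dog_listings, the column names, and the template name in the comment are hypothetical, and the project's real view code may differ.

```python
import csv
import tempfile
from pathlib import Path


def load_dog_listings(csv_path):
    """Read the listings CSV into a list of dicts, one per dog.

    Returns an empty list when the file has not been copied over yet,
    so the page can render an empty state instead of failing.
    """
    path = Path(csv_path)
    if not path.exists():
        return []
    with path.open(newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


# Inside a Django view, the result could be handed to a template, e.g.:
#   return render(request, "dogs.html", {"dogs": load_dog_listings(path)})
# ("dogs.html" is a hypothetical template name.)

# Small demonstration with a temporary file standing in for old_dogs.csv.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "old_dogs.csv"
    sample.write_text(
        "name,breed,url\nBiscuit,Beagle,u/1\nJuno,Husky,u/2\n",
        encoding="utf-8",
    )
    listings = load_dog_listings(sample)
    missing = load_dog_listings(Path(tmp) / "not_copied.csv")
```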

Technical Implementation

Dog Go leverages several key technologies to provide robust functionality:

- Selenium WebDriver: Enables reliable web scraping of dynamic PetFinder pages

- Pandas: Provides powerful data manipulation and analysis capabilities

- Django: Offers a mature web framework for the user interface

- CSV Storage: Simple, portable data persistence without database complexity

Current Limitations & Future Development

While Dog Go successfully demonstrates the core concept of automated pet listing monitoring, the project represents an early-stage implementation with several areas identified for improvement:

Planned Enhancements

- Dynamic Updates: Integration allowing users to trigger update_dogs.py directly from the webapp, with real-time site updates as new dogs are discovered

- Database Migration: Transition from CSV files to a proper database system (SQLite3 or PostgreSQL) for better data management and query capabilities

- Advanced Filtering: Implementation of search and filter functionality to help users efficiently browse through large collections of dog listings

- User Management: Multi-user support allowing each user to maintain personalized search parameters and saved preferences

- Notification System: Email or push notifications when new dogs matching specific criteria become available
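To give a sense of what the planned database migration could buy, here is a minimal sketch using Python's built-in sqlite3 module. The table layout, the column names, and the save_dogs helper are assumptions made for illustration and are not part of the current project.

```python
import sqlite3


def save_dogs(conn, dogs):
    """Insert dogs into a `dogs` table and return how many were new.

    The url column is the primary key, so INSERT OR IGNORE silently
    skips dogs that are already stored. This replaces the manual
    comparison against a CSV file.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS dogs ("
        "url TEXT PRIMARY KEY, name TEXT, breed TEXT)"
    )
    before = conn.total_changes
    conn.executemany(
        "INSERT OR IGNORE INTO dogs (url, name, breed) "
        "VALUES (:url, :name, :breed)",
        dogs,
    )
    conn.commit()
    return conn.total_changes - before


conn = sqlite3.connect(":memory:")  # in-memory database for the demo
added_first = save_dogs(conn, [
    {"url": "u/1", "name": "Biscuit", "breed": "Beagle"},
    {"url": "u/2", "name": "Juno", "breed": "Husky"},
])
added_second = save_dogs(conn, [
    {"url": "u/2", "name": "Juno", "breed": "Husky"},   # already stored
    {"url": "u/3", "name": "Moose", "breed": "Boxer"},  # new
])
total = conn.execute("SELECT COUNT(*) FROM dogs").fetchone()[0]
huskies = conn.execute(
    "SELECT name FROM dogs WHERE breed = ?", ("Husky",)
).fetchall()
```

With the uniqueness constraint living in the database, duplicate detection and the filtering enhancement both reduce to ordinary SQL queries.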

Scalability Considerations

Future iterations would also address scalability concerns, including more efficient data storage, improved scraping performance, and better error handling for large-scale deployment. The current architecture provides a solid foundation that can be extended to support these enhanced features.

Dog Go demonstrates how web scraping can solve real-world problems by automating tedious monitoring tasks, ultimately helping connect adoptable dogs with loving families more efficiently.